In my previous blog posts, I’ve been talking about containers themselves quite a bit and touched on orchestration (how containers are managed). Let’s now dive a bit into Kubernetes and discuss what’s inside that black box called a Kubernetes Cluster. This will give us some perspective on where Kubernetes fits when we talk about Tanzu in the next blog post.

Kubernetes was originally designed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF). Kubernetes can be deployed as infrastructure or as part of a platform (PaaS) via cloud providers like AWS or VMware’s Tanzu (which can run in AWS as well).

In the shift from monolithic applications to mini/microservices, container solutions have grown due to the many advantages they have. However, when a monolithic application becomes many microservices and each microservice can independently scale, the number of containers grows significantly. It is not uncommon for enterprises to have tens of thousands, or hundreds of thousands, of containers running at any given time. Kubernetes helps manage all those containers, but as the number of containers grows, so does the number of Kubernetes clusters. That’s where other tools and platforms, like Tanzu, step in to help manage those larger, multi-cluster environments.

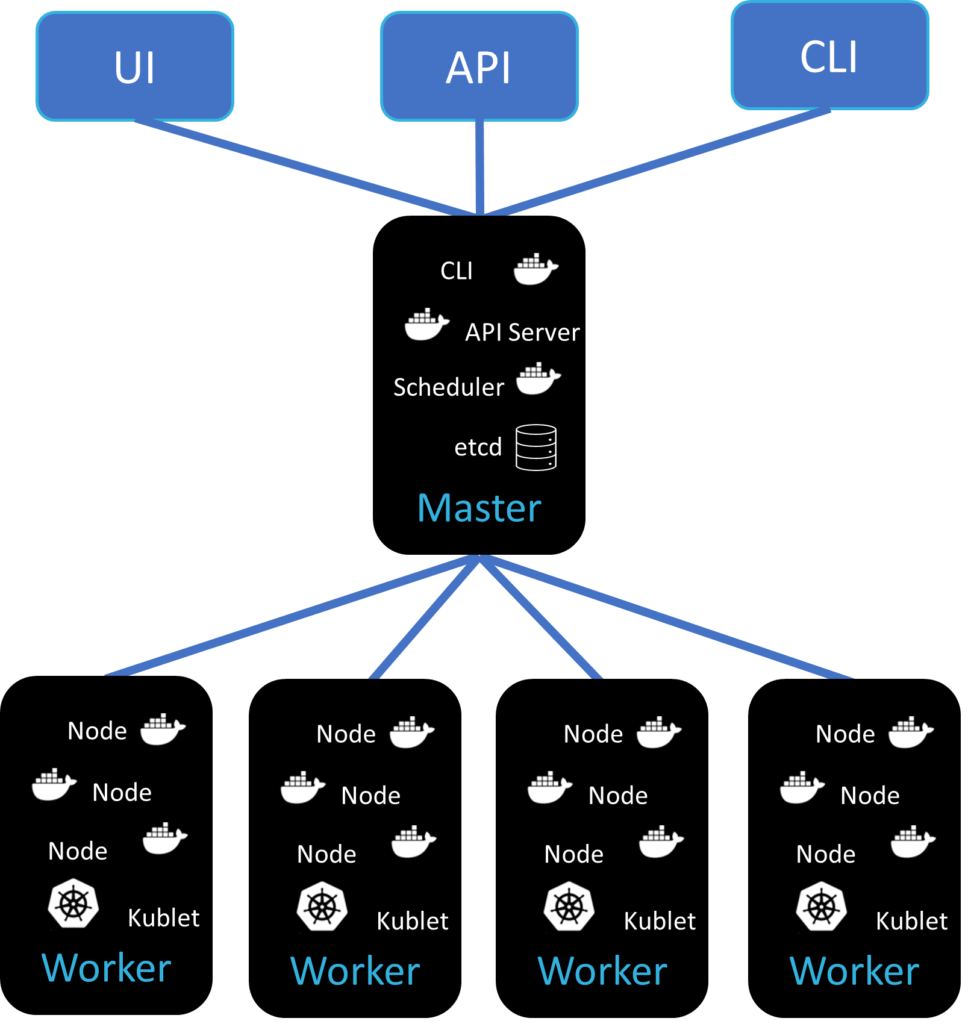

Below are the major components of Kubernetes and what they do:

Master Node

- Key Kubernetes processes that run and manage the cluster. There can be more than one master node for high availability.

- Key Features:

  - API Server: Entry point to the Kubernetes cluster for the user interface, API, and CLI

  - Controller Manager: Tracks the state of the cluster and works to bring it back to the desired state, for example by replacing pods that have failed

  - Scheduler: Decides which worker node each new pod runs on, based on what the application needs and what capacity the nodes have available

  - etcd: A key-value store that contains the state of the cluster

- Virtual Network

  - Network proxy layer for the cluster
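To make the controller manager and scheduler roles above a little more concrete, here is a minimal Python sketch. It is a toy model, not how Kubernetes is implemented: the `Node` class, the `reconcile` and `schedule` functions, the `cluster_state` dictionary, and the "pick the node with the most free CPU" rule are all assumptions made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_free: int  # free CPU in millicores (illustrative unit for this toy)
    pods: list = field(default_factory=list)


def reconcile(desired_replicas: int, running: list) -> int:
    """Controller-style check: how many pods to add (+) or remove (-)."""
    return desired_replicas - len(running)


def schedule(pod_name: str, cpu_request: int, nodes: list) -> Node:
    """Scheduler-style choice: keep nodes that fit, pick the one with most free CPU."""
    candidates = [n for n in nodes if n.cpu_free >= cpu_request]
    if not candidates:
        raise RuntimeError(f"no node can fit {pod_name}")
    best = max(candidates, key=lambda n: n.cpu_free)
    best.cpu_free -= cpu_request
    best.pods.append(pod_name)
    return best


# Desired state, as it might be recorded in the cluster's key-value store.
cluster_state = {"web": {"replicas": 3, "cpu_request": 500}}
nodes = [Node("worker-1", 1000), Node("worker-2", 2000)]
running = []

spec = cluster_state["web"]
for i in range(reconcile(spec["replicas"], running)):
    pod = f"web-{i}"
    node = schedule(pod, spec["cpu_request"], nodes)
    running.append(pod)
    print(f"{pod} -> {node.name}")
```

The point of the sketch is the split in responsibilities: one piece notices the gap between desired and actual state, and another decides where each missing pod should go.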

Worker Node

- Runs the pods and containers (inside the pods)

- Key Features:

  - Kubelet

    - Primary Kubernetes ‘agent’ that runs on each node

    - Communicates with the master node through the API server

    - Registers the node with the master and manages communication with it

  - Pods

    - Multiple pods per worker node

  - Containers:

    - Run inside the pods

    - Where the application runs, packaged with the OS libraries and other resources the application needs to run
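The kubelet's job described above (register the node, then keep the node's pods in line with what the master asks for) can be sketched in a few lines of Python. This is only a toy under stated assumptions: `ApiServer` is a hypothetical in-memory stand-in, and `Kubelet.sync` is an invented method name, not part of any real Kubernetes client library.

```python
class ApiServer:
    """Hypothetical in-memory stand-in for the cluster's API server."""

    def __init__(self):
        self.nodes = {}        # node name -> last reported status
        self.assignments = {}  # pod name -> node name chosen by the scheduler

    def register_node(self, name):
        self.nodes[name] = "Ready"

    def pods_for(self, node_name):
        return sorted(p for p, n in self.assignments.items() if n == node_name)


class Kubelet:
    """Toy node agent: registers its node, then starts or stops local pods."""

    def __init__(self, node_name, api):
        self.node_name = node_name
        self.api = api
        self.running = set()
        api.register_node(node_name)

    def sync(self):
        """Compare assigned pods with running pods; return (started, stopped)."""
        wanted = set(self.api.pods_for(self.node_name))
        started = wanted - self.running
        stopped = self.running - wanted
        self.running = wanted
        return sorted(started), sorted(stopped)


api = ApiServer()
kubelet = Kubelet("worker-1", api)
api.assignments.update({"web-0": "worker-1", "web-1": "worker-2"})
print(kubelet.sync())  # first pass: this node picks up the pod assigned to it
api.assignments.pop("web-0")
print(kubelet.sync())  # second pass: the pod is no longer assigned here
```

Note that the agent only ever talks to the API server; it never needs to know about the scheduler or the other worker nodes.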

Pod

- Smallest deployable Kubernetes component; runs one or more containers

- There are multiple pods per worker node

- Key Features:

  - IP Address: Each pod has its own IP address

  - Services: Give a group of pods a stable name and address, so communication goes through the service rather than a pod IP address, which can change as pods are stopped and started for load balancing
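Why services matter is easier to see with a small example. The Python sketch below is a simplified model, assuming that a service finds its pods by matching labels; the `Pod` and `Service` classes, the `endpoints` method, and the IP addresses are hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict


@dataclass
class Service:
    name: str
    selector: dict

    def endpoints(self, pods):
        """Return the IPs of pods whose labels match every selector entry."""
        return [
            p.ip for p in pods
            if all(p.labels.get(k) == v for k, v in self.selector.items())
        ]


pods = [
    Pod("web-0", "10.0.0.4", {"app": "web"}),
    Pod("web-1", "10.0.0.5", {"app": "web"}),
    Pod("db-0", "10.0.0.9", {"app": "db"}),
]
web = Service("web", {"app": "web"})
print(web.endpoints(pods))

# Replace web-0: the new pod gets a new IP, but clients still ask for "web".
pods[0] = Pod("web-0", "10.0.0.17", {"app": "web"})
print(web.endpoints(pods))
```

Callers only ever refer to the service name, so a pod being restarted with a different IP address does not require any change on the client side.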

The diagram below shows how the master node, worker nodes, and pods all work together.

The Kubernetes conversation starts to get tricky when an organization has thousands of nodes, multiple clusters, and security issues that must be addressed. Many pure Kubernetes installations have several applications and tools in place for management and security, as well as homegrown tools and scripts. Not only must the tools and individual containers be updated, but they must all be tested and work together properly after the update.

Leveraging a container platform like Tanzu can help simplify the management of the container environment. Tested patches come through VMware ready for deployment to patch containers and the platform itself. This can be an excellent solution for many companies, especially where DevOps resources are already spread thin.

If your company has a particular situation you would like to discuss, drop a note on our Contact Us page.