In my last post, I described virtual machines (VMs) and containers using real-world analogies of houses and apartments. In this post, we’ll continue to reference those analogies, but dive deeper into the technical differences in order to understand the advantages and disadvantages of containers. As a recap, I used the following analogies:

- Stand-alone House = Server

- Multifamily House = VMs

- Apartment = Container

- Renting a house = Cloud instances (EC2 instances launched from AMIs on AWS, etc.)

- Airbnb = Serverless (use for only as long as you need it)

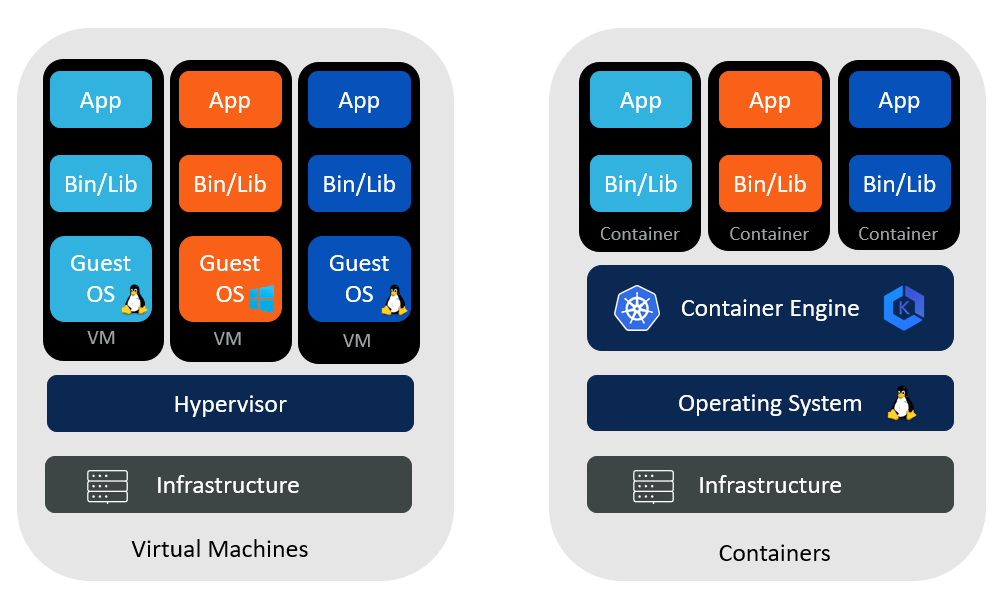

At a very high level, VMs are virtualized at the hardware level and containers are virtualized at the OS level. In the VM scenario in the diagram, the hypervisor is a software layer (sometimes in firmware or built into the hardware) that abstracts each guest operating system from the hardware infrastructure (disks, memory, network, CPU, etc.). It allows each virtual machine to act as if it has its own infrastructure. The VMs in this scenario can each run a different kind or version of operating system.

Comparing this to our multifamily house analogy, the server is like the house and its foundation. Storage, networking, and CPU are like the water and electricity coming into the house through a single pipe or cable. In a multifamily house, these utilities are divided and metered (splitters, meters, valves, etc.) so each living area has its own share; in the VM scenario, the hypervisor does this dividing. Inside each living area (like a VM), you can do what you want within the limits of the resources that are available.

In the container scenario, the container engine sits on top of the host operating system and virtualizes at that level, so each container behaves as if it has its own copy of the operating system. This is done through an OS feature called namespaces: each container runs in its own namespaces and is isolated at that level. Each container has part of the OS to itself, specifically the binaries and libraries that the application depends on, while the kernel is shared with the host. Some of these binaries and libraries may be installed for the application and some are part of the OS.

Comparing this to an apartment building or hotel, the infrastructure is shared, just as in the multifamily house. Here, however, resources like water, heat, and electricity, along with amenities like directories, pools, or elevators, are shared as well rather than divided per unit. In other words, more resources are shared in the container scenario, with the container engine managing them through scheduling, load-balancing, and traffic routing.
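
To make the namespace idea a little more concrete: on a Linux host, the namespaces a process belongs to are exposed as symbolic links under `/proc/<pid>/ns`. The following is a minimal sketch, assuming a Linux system with `/proc` mounted; the helper name `namespace_ids` is made up for illustration and is not part of any container engine.

```python
import os


def namespace_ids(pid="self"):
    """Return {namespace name: identifier} for a process.

    Assumes a Linux /proc filesystem; returns an empty dict if the
    directory is missing (for example on macOS or Windows).
    """
    ns_dir = f"/proc/{pid}/ns"
    ids = {}
    try:
        names = sorted(os.listdir(ns_dir))
    except OSError:
        return ids
    for name in names:
        try:
            # Each entry is a symlink whose target names the namespace,
            # in the form "type:[inode number]".
            ids[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue
    return ids


if __name__ == "__main__":
    for name, ident in namespace_ids().items():
        print(f"{name:>10} -> {ident}")
```

Running this once on the host and once inside a container will typically print different identifiers for entries such as `pid`, `mnt`, and `net`, which is the isolation described above. Two processes that share a namespace show the same identifier for it.
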

In a large hotel, think Las Vegas size, there are many elevators and floors, with more being used during peak times or seasons. When the hotel is less busy, management decides whether to shut down some elevators or use fewer floors. For containers, it’s up to the container engine and its orchestration layer to manage how people are routed to different places via the elevators, as well as to balance the load by adding or removing floors in service.
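
The hotel picture can be sketched as a toy model: decide how many floors to keep in service for the current number of guests, then send guests to floors in turn. This is only an illustration of the idea, not how any particular orchestrator works; the function names `floors_needed` and `route`, and all the numbers, are assumptions made up for the example.

```python
import math
from itertools import cycle


def floors_needed(guests, guests_per_floor, min_floors=1, max_floors=10):
    """Floors (container replicas) to keep in service for the current load."""
    wanted = math.ceil(guests / guests_per_floor)
    # Never go below the minimum or above what the building has.
    return max(min_floors, min(max_floors, wanted))


def route(guests, floors):
    """Round-robin routing: each guest (request) goes to the next floor in turn."""
    assignment = {floor: [] for floor in floors}
    for guest, floor in zip(guests, cycle(floors)):
        assignment[floor].append(guest)
    return assignment


if __name__ == "__main__":
    # Quiet season, busy season, and more demand than the hotel can serve.
    for load in (30, 450, 5000):
        floors = floors_needed(load, guests_per_floor=100)
        print(f"{load} guests -> {floors} floors in service")
    print(route(["g1", "g2", "g3", "g4", "g5"], ["floor-1", "floor-2"]))
```

The minimum and maximum mirror the hotel’s limits: management keeps at least one floor open even when the hotel is nearly empty, and cannot open more floors than the building has.
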